NEPI Engine – AI Management System

Introduction

This document describes NEPI’s AI Model Management (AIM) system, the file content and structure required of AIM AI models, and AI model deployment and use within the AIM system. The AIM system provides a framework for storing, connecting, and running AI models from an onboard AI model library, as well as publishing AIM results to downstream consumers such as a NEPI automation script or a connected control system. The AIM system manages which of the available AI models is active at a given time, which sensor stream is provided as input to the active model, and any additional parameters related to AI model execution. The AIM system also supports external AI process orchestration such as active model selection and on-the-fly data input switching. Finally, the AIM system supports AI model output logging and ensures that AI model outputs adhere to a standard ROS 2D label topic format, providing a consistent interface for both on-board and off-board consumers of the AIM data output streams.

NOTE: This document is written for NEPI solution developers working within the NEPI File System. For instructions on accessing and customizing the NEPI File System installed and running on a device, see the tutorials:

https://nepi.com/nepi-tutorials/nepi-engine-accessing-the-nepi-file-system/

https://nepi.com/nepi-tutorials/nepi-engine-customizing-the-nepi-file-system/

NOTE: NEPI users who are only interested in developing, deploying, and using custom NEPI-compatible AI models in their systems may want to jump to the NEPI Engine tutorial “Creating Custom AI Models” after a high-level review of this document. The tutorial is available in the Solution Development Tutorials section at: https://nepi.com/tutorials/

NEPI AIM System Overview

At the heart of NEPI’s AIM system is an image classification AI framework wrapped in a custom ROS node and configuration management system. The AIM system works with pre-trained AI detector models stored in an on-board library of installed AI detector models. It manages AI detector model startup and setup based on a configuration file, and supports in-situ configuration of the AI detector data input and settings through a set of ROS services, as illustrated in the figure below.

AIM ROS Node

The NEPI AIM ROS node is an application wrapper built around industry standard AI inference frameworks that manages model loading, image connections, real-time configuration, and supported data output streams. AI models, configuration, and image input source connections are managed through ROS topics.

AIM ROS Topics

| ROS Topic | Description |
| --- | --- |
| start_classifier | Accepts three inputs: the image source topic to connect to the AI classifier model, the name of the AI classifier model to load, and a detection threshold value. |
| stop_classifier | Accepts the name of the AI classifier model to stop. |

These AIM ROS AI model orchestration controls are also callable from NEPI automation scripts. For an example automation script that starts an AI detection process using these ROS topics, see the “ai_detector_config_script.py” script available at:

https://github.com/numurus-nepi/nepi_sample_auto_scripts
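The three start_classifier inputs described above can be checked before they are handed to a ROS publisher. The following is a minimal sketch in plain Python, not the AIM message definition: the `StartClassifierRequest` class, its field names, the accepted threshold range, and the image topic name are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StartClassifierRequest:
    """Hypothetical container for the three start_classifier inputs."""
    image_topic: str            # ROS image topic to connect to the model
    model_name: str             # name of a model in the AI Model Library
    detection_threshold: float  # minimum score for a detection to be reported


def make_start_request(image_topic, model_name, detection_threshold):
    """Validate the inputs and bundle them into a single request object."""
    if not image_topic:
        raise ValueError("image_topic must not be empty")
    if not model_name:
        raise ValueError("model_name must not be empty")
    # Assumed range: detection scores are described as 0.0 - 1.0 values.
    if not 0.0 <= detection_threshold <= 1.0:
        raise ValueError("detection_threshold must be within [0.0, 1.0]")
    return StartClassifierRequest(image_topic, model_name, float(detection_threshold))


# "camera/color_2d_image" is a placeholder topic name, not a documented one.
request = make_start_request("camera/color_2d_image", "yolov3-tiny", 0.3)
print(request)
```

In an automation script, the fields of such a request would then be copied into whatever message type the start_classifier topic expects on your device.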

If you need to add AI frameworks to the AIM system on your NEPI device, you can access and edit the AIM ROS node source code located in the NEPI File System folder:

/home/nepi/nepi_base_ws/src/nepi_edge_sdk_ai/

NOTE: For more information on customizing and compiling NEPI ROS node changes, see the NEPI Engine tutorial on customizing the NEPI File System at:

https://nepi.com/nepi-tutorials/nepi-engine-customizing-the-nepi-file-system/

AIM AI Model Library

On bootup, the AIM system queries all the models stored in the AIM Onboard AI Model Library located on the user storage drive mounted in the NEPI File System at:

/mnt/nepi_storage/ai_models

This folder is also accessible and editable from a PC’s File Manager application connected to the NEPI user storage drive at:

smb://192.168.179.103/nepi_storage/ai_models
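To see what is currently in the library folder, a script can walk it and group model specification files by framework subfolder. This is a minimal sketch, not the AIM system's own discovery logic: it assumes that every “.yaml” file under a framework subfolder describes one model, and `list_model_specs` is a hypothetical helper name.

```python
import tempfile
from pathlib import Path


def list_model_specs(library_root):
    """Group every .yaml file under library_root by its top-level subfolder."""
    root = Path(library_root)
    models = {}
    if not root.is_dir():
        return models
    for spec in sorted(root.rglob("*.yaml")):
        parts = spec.relative_to(root).parts
        if len(parts) < 2:
            continue  # ignore files that are not inside a framework subfolder
        models.setdefault(parts[0], []).append(spec.stem)
    return models


# Demonstration against a throwaway folder rather than a real NEPI device.
with tempfile.TemporaryDirectory() as tmp:
    config_dir = Path(tmp) / "darknet_ros" / "config"
    config_dir.mkdir(parents=True)
    (config_dir / "yolov3-tiny.yaml").write_text("# placeholder\n")
    found = list_model_specs(tmp)

print(found)
```

On a device, the same function would be called with the library path given above, for example `list_model_specs("/mnt/nepi_storage/ai_models")`.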

NOTE: Models deployed into the AIM’s AI Model Library must be supported by one of the AIM AI frameworks installed on your NEPI device, and must be placed into the library subfolders for the specific AI framework you intend to run them with. For a list of AIM supported AI frameworks, required files, and AIM Model Library deployment folders, see the document “NEPI Engine – Supported AI Frameworks and Models” available at:

https://nepi.com/documentation/

AIM Config Files

The AIM uses a configuration file to set the initial AIM parameters: which, if any, AI detector is started on NEPI device boot, which input image the detector is applied to, and the detection threshold control parameter. The NEPI device provides a default factory configuration and supports a “user” configuration override that allows system developers to create a user-defined AI detector startup configuration file. The configuration framework also offers a “reset to factory” option to revert to the AIM’s factory settings.

The AIM Factory Config File, along with the Linux symbolic link that controls whether the factory or user config file is loaded, is located in the NEPI File System at:

/opt/nepi/ros/etc/nepi_darknet_ros_mgr/

If user settings have been saved on the system, the AIM User Config File, along with most NEPI user config files, is located on the NEPI network shared user storage drive at:

/mnt/nepi_storage/user_cfg/ros/nepi_darknet_ros_mgr.yaml.user

This folder is also accessible and editable from a PC’s File Manager application connected to the NEPI user storage drive at:

smb://192.168.179.103/nepi_storage/user_cfg/ros
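Because a symbolic link selects between the factory and user files, a script can tell which configuration is active by resolving that link. The sketch below assumes the user file keeps the “.user” suffix shown above and that the link points directly at one of the two files; the link name and the factory file name used here are hypothetical, so check the actual names on your device.

```python
import os
import tempfile
from pathlib import Path


def active_config_kind(link_path):
    """Report whether a config symlink resolves to a user or factory file."""
    target = Path(os.path.realpath(link_path))
    return "user" if target.name.endswith(".user") else "factory"


# Demonstration in a throwaway directory instead of the device folders.
with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)
    factory = tmp / "nepi_darknet_ros_mgr.yaml.factory"  # hypothetical name
    user = tmp / "nepi_darknet_ros_mgr.yaml.user"
    link = tmp / "nepi_darknet_ros_mgr.yaml"             # hypothetical link name
    factory.write_text("# factory settings\n")
    user.write_text("# user settings\n")

    link.symlink_to(user)
    kind_with_user = active_config_kind(link)

    link.unlink()
    link.symlink_to(factory)  # mimics a "reset to factory"
    kind_after_reset = active_config_kind(link)

print(kind_with_user, kind_after_reset)
```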

The AIM user configuration settings can be adjusted and saved through ROS configuration topics or the NEPI device’s Resident User Interface (RUI), as described in the NEPI Engine AI Application tutorial available at: https://nepi.com/tutorials/

AIM Input Streams

The AIM system accepts image streams from standard ROS image topics, which NEPI’s sensor and processing systems produce natively. Input image topic data can include color images, black and white images, depth images, automation-processed images, or any other type of image currently being published as a ROS topic on the NEPI device. While the AIM system allows only one AI detector model to run at a given time, with only one ROS image topic connected to that detector, it does support in-situ image topic switching. Additionally, NEPI’s built-in Sequencer application provides a means to create a custom sequenced ROS image topic from multiple image topics available on the NEPI device. Learn more about the Sequencer application under the Built-In Applications tutorial section at: https://nepi.com/tutorials/

AIM Output Topics

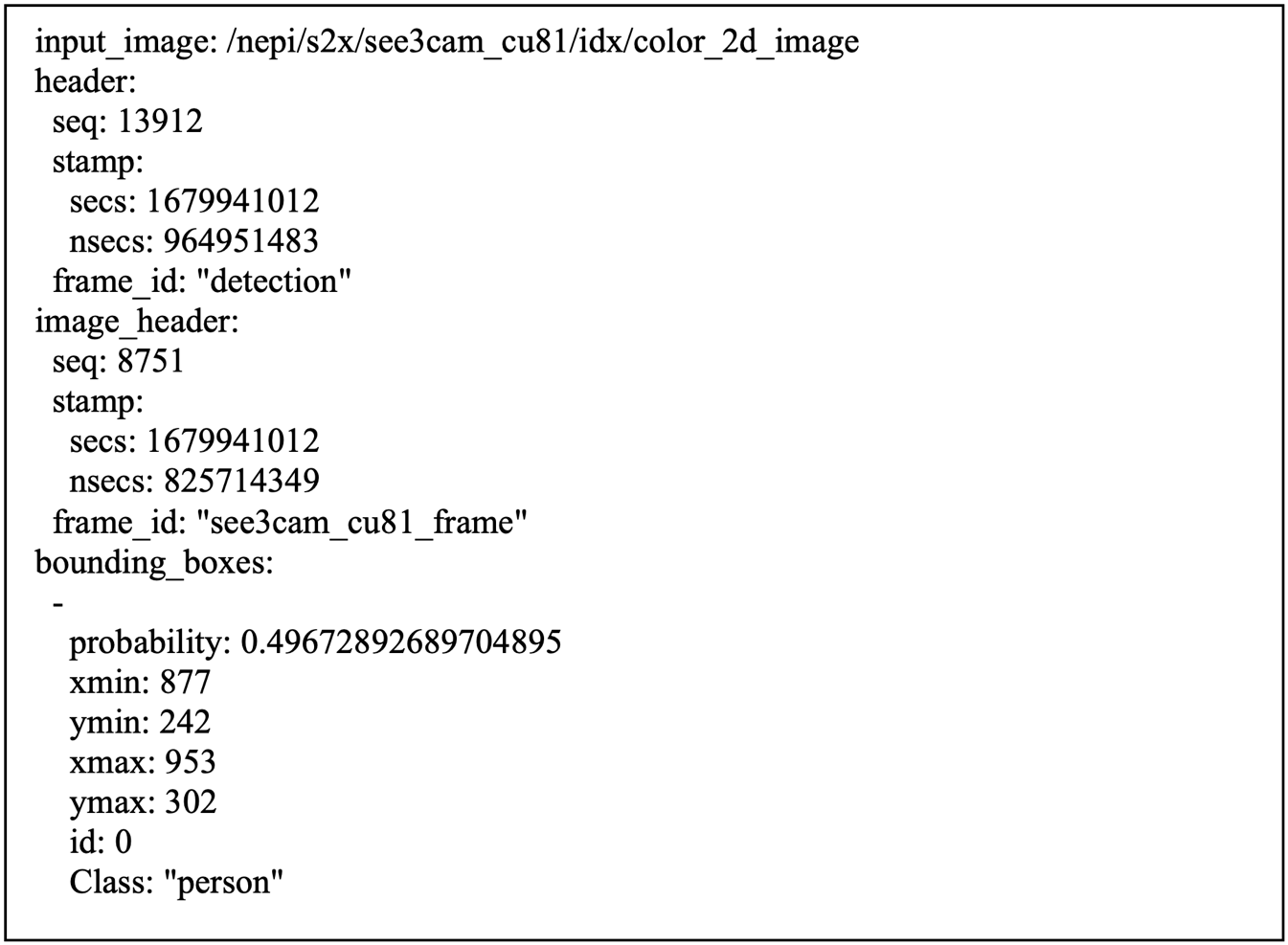

The AIM publishes AI detector output data streams that include a Detection Image stream, with bounding boxes and labels overlaid on the input source image for any objects detected in the image; a custom textual Bounding Box message that includes the image input data source and, for each detected object, the pixel boundaries of its bounding box along with the corresponding object label and detection score (i.e., a 0.0 – 1.0 probability score); and a custom Found Object message that includes the number of objects detected in each input image processed. These output data streams are published as ROS topics and are available to downstream consumers of the AIM output data.

AIM ROS output messages are published under the “<basename>/classifier” ROS namespace:

<basename>/classifier/bounding_boxes

<basename>/classifier/detection_image

<basename>/classifier/found_object

By default, AIM output Bounding Box messages are saved by NEPI’s data logging system whenever global data saving is enabled. The Bounding Box messages are saved as text files. An example of an AIM Bounding Box output text file is provided below.

NOTE: While Bounding Box messages are only published if at least one object is detected in the image, both the Detection Image and Found Object message streams are published for every processed image.
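A downstream consumer typically needs the full output topic names and some way to reduce the detections in a Bounding Box message to a summary. The following is a minimal sketch in plain Python: the `Detection` class is a hypothetical stand-in for one bounding box entry, not the actual ROS message type, and the basename used is a placeholder.

```python
from collections import Counter
from dataclasses import dataclass

OUTPUT_NAMES = ("bounding_boxes", "detection_image", "found_object")


def aim_output_topics(basename):
    """Build the full output topic names under <basename>/classifier."""
    base = basename.rstrip("/")
    return {name: f"{base}/classifier/{name}" for name in OUTPUT_NAMES}


@dataclass(frozen=True)
class Detection:
    """Hypothetical stand-in for one entry of a Bounding Box message."""
    label: str
    score: float  # detection score in the range 0.0 - 1.0
    xmin: int
    ymin: int
    xmax: int
    ymax: int


def summarize(detections, min_score):
    """Count detections per label, ignoring those scoring below min_score."""
    return Counter(d.label for d in detections if d.score >= min_score)


topics = aim_output_topics("/nepi/device1")  # placeholder basename
sample = [
    Detection("person", 0.91, 10, 20, 110, 220),
    Detection("person", 0.42, 300, 40, 360, 200),
    Detection("car", 0.77, 150, 90, 400, 260),
]
counts = summarize(sample, min_score=0.5)
print(topics["bounding_boxes"], dict(counts))
```

Since Bounding Box messages are only published when something is detected, a consumer that needs to react to empty frames would watch the Found Object stream instead.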

Saving AIM Output Data On-Board

The AIM supports the NEPI device data logging interface, which allows enabling/disabling the AI detector output storage to disk, setting subdirectories and file name prefixes, and adjusting the maximum save rate. By default, the sensor-produced AI detector input image files are saved in the same location, allowing access to the source data from which the Detector output was derived.

AIM ROS Topics and Services

For more information on AIM ROS topics and services, see the NEPI Engine File System Interfacing API Manual at: https://nepi.com/documentation/

Supported AI Frameworks

NOTE: To see a list of AIM supported AI frameworks, see the document “NEPI Engine – Supported AI Frameworks and Models” available at:

https://nepi.com/documentation/

NOTE: You can download example NEPI AIM compatible AI model files from:

https://www.dropbox.com/scl/fo/dc9epsfeewgtxxkrd2mli/h?rlkey=dhqd9ljkg2k113b6us8n15acd&dl=0

Darknet AI Framework

The Darknet framework is flexible and can run many Deep Neural Network (DNN)-based 2D image Detectors as long as the deployed AI detector configuration files adhere to the standards defined in this API. Information and details on the Darknet AI framework are available at: https://github.com/AlexeyAB/darknet

NOTE: Darknet itself provides a detector-training framework that produces configuration files in the appropriate format for AIM deployment and use. Alternatively, there are many open-source tools available to translate DNN-based detectors developed in another framework (TensorFlow, Keras, etc.) to a Darknet-conformant scheme.

The Darknet AI Framework is located in the NEPI File System at:

/home/nepi/nepi_base_ws/src/nepi_edge_sdk_ai/nepi_darknet_ros/darknet

The NEPI Darknet AI Framework source-code is available from the NEPI GitHub repository at:

https://github.com/numurus-nepi/darknet

Model Training

Custom AIM AI detectors must adhere to the Darknet configuration format, which includes a network description file to specify the topology of the DNN along with a separate weights file to describe the DNN node weights. In a typical training scenario, the network topology is designed prior to training, and the weights file is the output of the training exercise. The format and contents of these files are described in the Darknet documentation. For more information on training Darknet AI detector models, a good reference is the Darknet AI detector training guide at:

https://pjreddie.com/darknet/train-cifar/

Whenever possible, a new detector to be deployed to a NEPI device should be trained using Darknet’s built-in and well-documented training system. If a DNN-based AI detector trained in a different framework, with non-conformant network and weights file formats, is to be deployed, a translation step is required. The exact steps for this translation depend on the source framework and file format, so they cannot be described in detail here. The following link provides some guidance on selecting open-source converters based on input and output type; the most relevant entries in its matrix are those along the Darknet row.

https://ysh329.github.io/deep-learning-model-convertor/

In some instances, there is no direct translator between the source format and the Darknet format. In those cases, it is necessary to perform a two-stage translation, with an intermediate representation. The Open Neural Network Exchange (ONNX) is a common intermediate representation for this purpose.

Model Files

A NEPI AIM deployable Darknet AI model package consists of three files: a custom “.yaml” specification file, a “.cfg” network configuration file produced by AI training, and a “.weights” file, also produced by AI training, that contains the neural network weights. All three files should share the same base name, each with its own file type extension.

NEPI Deployable AI Model Required Files

| File | Description |
| --- | --- |
| model_name.yaml | Specifies the names of the model config and weights files (the next two rows of this table), the initial threshold value, and the mapping between numeric detection IDs and detection class names. |
| model_name.cfg | Describes the YOLO neural network configuration (layer topology, etc.). |
| model_name.weights | Large binary file containing the weights of the trained neural network. |

While the “.cfg” and “.weights” files are automatically generated as part of the AI model training process, the “.yaml” file, which provides the AIM system with details about the model, is manually created as part of the NEPI deployment process.
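Before deploying a model, it can be useful to confirm that all three files are present and share the same base name. The helper below is a small illustrative sketch (`missing_model_files` is a hypothetical name, not part of NEPI) that reports which of the required files are absent from a list of file names.

```python
REQUIRED_EXTENSIONS = (".yaml", ".cfg", ".weights")


def missing_model_files(model_name, available_files):
    """Return the required file names for model_name that are not available."""
    available = set(available_files)
    expected = [model_name + ext for ext in REQUIRED_EXTENSIONS]
    return [name for name in expected if name not in available]


# Example: the weights file has not been copied yet.
files = ["yolov3-tiny.yaml", "yolov3-tiny.cfg"]
missing = missing_model_files("yolov3-tiny", files)
print(missing)
```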

Example: Darknet “.yaml” AIM configuration file contents for the “yolov3-tiny” 2D image “common object detector” model

NOTE: The “names:” list section of this file example has been abbreviated to reduce space.

    # This file provides the config file schema for the yolov3-tiny 2D image detector, and serves as an example
    # config file schema for all NEPI-managed 2D image detectors.
    config_file_format: YAML
    section_0:
      header_text: yolo_model
      section_0:
        configurable_fields:
          -
            name:
              default: yolov3-tiny.cfg
              description: "Specifies the network configuration file"
              read-only: true
              type: String
        header_text: config_file
      section_1:
        configurable_fields:
          -
            name:
              default: yolov3-tiny.weights
              description: "Specifies the network weights file"
              read-only: true
              type: String
        header_text: weight_file
      section_2:
        configurable_fields:
          -
            value:
              default: 0.3
              description: "Specifies the default threshold for this image detector. Overridden at manager level"
              max_value: 1.0
              min_value: 0.01
              read-only: true
              type: Float
        header_text: threshold
      section_3:
        configurable_fields:
          -
            names:
              default:
                - person
                - bicycle
                - car
                - motorbike
                - airplane
                - bus
                - train
                - truck
                - boat
                - bench
                - bird
                - cat
              description: "Ordered list of the detection classes trained for this image detector"
              read-only: true
              type: List
        header_text: detection_classes
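The ordered class list and the threshold limits in this example file lend themselves to two small lookups. The sketch below assumes that numeric detection IDs are zero-based indexes into the ordered names list, which the file does not state explicitly, and it reuses the abbreviated list from the example rather than a complete one. Both helper names are hypothetical.

```python
# Abbreviated class list copied from the example file; a real file lists every class.
CLASS_NAMES = ["person", "bicycle", "car", "motorbike", "airplane", "bus",
               "train", "truck", "boat", "bench", "bird", "cat"]


def class_name(class_id, names=CLASS_NAMES):
    """Map a numeric detection ID to its class name (assumes zero-based IDs)."""
    if 0 <= class_id < len(names):
        return names[class_id]
    return f"unknown({class_id})"


def clamp_threshold(value, min_value=0.01, max_value=1.0):
    """Keep a requested threshold within the limits given in the example file."""
    return max(min_value, min(max_value, value))


print(class_name(2), class_name(99), clamp_threshold(0.0), clamp_threshold(1.5))
```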

Model Deployment

Once you have the “.cfg”, “.weights”, and “.yaml” files ready for your new AI detector model, the last step before running and testing your model live is to copy them to the appropriate folders in your NEPI device’s AIM Model Library, located on the user storage drive mounted in the NEPI File System at:

/mnt/nepi_storage/ai_models

This folder is also accessible and editable from a PC’s File Manager application connected to the NEPI user storage drive at:

smb://192.168.179.103/nepi_storage/ai_models

Copy your AI detector model files to the folders as shown in the table below.

NEPI AIM Model Library File Locations

| File | Destination folder |
| --- | --- |
| “.yaml” file | nepi_storage\ai_models\darknet_ros\config |
| “.cfg” file | nepi_storage\ai_models\darknet_ros\yolo_network_config\weights |
| “.weights” file | nepi_storage\ai_models\darknet_ros\yolo_network_config\weights |
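The copy step can also be scripted. The following is a minimal sketch, not a NEPI tool: `deploy_model` is a hypothetical helper that takes the destination folder for each file extension as an argument, so the folders themselves should be taken from the table above. The demonstration uses placeholder folders in a temporary directory.

```python
import shutil
import tempfile
from pathlib import Path


def deploy_model(source_dir, model_name, destinations):
    """Copy <model_name><ext> into the folder mapped to each extension."""
    sources = {ext: Path(source_dir) / f"{model_name}{ext}" for ext in destinations}
    missing = [str(p) for p in sources.values() if not p.is_file()]
    if missing:
        # Refuse to deploy a partial model package.
        raise FileNotFoundError("missing model files: " + ", ".join(missing))
    copied = []
    for ext, src in sources.items():
        folder = Path(destinations[ext])
        folder.mkdir(parents=True, exist_ok=True)
        copied.append(Path(shutil.copy2(src, folder / src.name)))
    return copied


# Demonstration with placeholder folders in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)
    staging = tmp / "staging"
    staging.mkdir()
    for ext in (".yaml", ".cfg", ".weights"):
        (staging / f"my_model{ext}").write_bytes(b"placeholder")
    destinations = {
        ".yaml": tmp / "library" / "yaml_folder",
        ".cfg": tmp / "library" / "cfg_folder",
        ".weights": tmp / "library" / "weights_folder",
    }
    deployed = sorted(p.name for p in deploy_model(staging, "my_model", destinations))

print(deployed)
```

Checking for all three files before copying anything avoids leaving a partially deployed model in the library.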

Custom AI Models

For detailed tutorials on custom AI model training and deployment, see the NEPI Engine “Developing Custom AI Models” tutorial under the Solution Development Tutorials section at: